Create random files with random content with Java

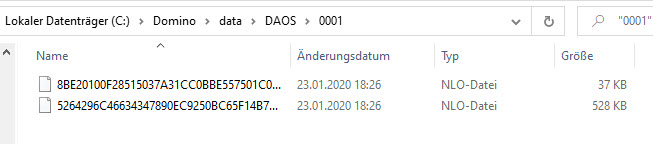

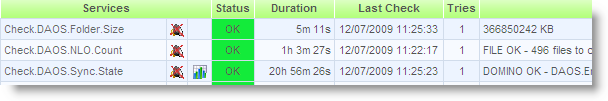

I was playing with DAOS in Domino 12 recently and needed a way to create thousands of test files with a given file size and random content.

I did not want to use existing files containing real data for my tests. There are several programs for Linux and Windows that do this, and a quick search will turn them up. But as a developer, I should be able to build my own tool.

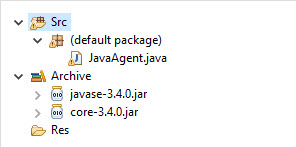

Here is some sample Java code that uses `java.util.Random` to create both the file names and the file content in an easy way.

```java
package de.eknori;

import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.util.Random;

public class DummyFileCreator {

    // Size of each file in bytes (129 KB)
    static int filesize = 129 * 1024;

    // Number of files to create
    static int count = 9500;

    // Target directory; created if it does not exist yet
    static File dir = new File("c:\\temp\\dummy\\");

    // File extension (the content itself is random binary data)
    static String ext = ".txt";

    public static void main(String[] args) {
        byte[] bytes = new byte[filesize];
        // A single Random instance is enough for all names and contents
        Random rand = new Random();

        if (!dir.isDirectory() && !dir.mkdirs()) {
            System.out.println("Could not create directory " + dir);
            return;
        }

        int created = 0;
        while (created < count) {
            // Timestamp plus random number; two files created in the same
            // millisecond can still collide, so skip names that already exist
            String name = String.format("%s%s%s", System.currentTimeMillis(), rand.nextInt(100000), ext);
            File file = new File(dir, name);
            if (file.exists()) {
                continue;
            }

            rand.nextBytes(bytes);

            // try-with-resources flushes and closes the stream, even if write() fails
            try (OutputStream os = new BufferedOutputStream(new FileOutputStream(file))) {
                os.write(bytes);
            } catch (IOException ioe) {
                System.out.println("Error while writing to file " + file + ": " + ioe);
                return;
            }
            created++;
        }
    }
}
```

The code is self-explanatory. Adjust `filesize`, `count`, `dir` and `ext` to your own needs, and you are ready to go.
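Editing and recompiling the class for every test run gets tedious. Here is a minimal sketch, assuming you would rather pass the settings on the command line. The class name `DummyFileCreatorCli`, the argument order and the optional `text` flag are my own inventions, not part of the tool above. The `text` mode exists because `Random.nextBytes` writes arbitrary binary data, so the `.txt` files are not really text; in that mode the buffer is filled with random printable ASCII characters instead.

```java
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.util.Random;

// Hypothetical variant of DummyFileCreator: settings come from the command line,
// and the content can optionally be random printable ASCII instead of raw bytes.
public class DummyFileCreatorCli {

    // Fill buf with random printable ASCII characters (' ' to '~', i.e. 32..126)
    static void fillPrintable(Random rand, byte[] buf) {
        for (int i = 0; i < buf.length; i++) {
            buf[i] = (byte) (' ' + rand.nextInt(95));
        }
    }

    // Create `count` files of `size` bytes in `dir`; returns the number of files written
    static int createFiles(File dir, int count, int size, String ext, boolean text, Random rand)
            throws IOException {
        if (!dir.isDirectory() && !dir.mkdirs()) {
            throw new IOException("Could not create directory " + dir);
        }
        byte[] bytes = new byte[size];
        int created = 0;
        while (created < count) {
            String name = System.currentTimeMillis() + "" + rand.nextInt(100000) + ext;
            File file = new File(dir, name);
            if (file.exists()) {
                continue; // name collision: draw a new name instead of overwriting
            }
            if (text) {
                fillPrintable(rand, bytes);
            } else {
                rand.nextBytes(bytes);
            }
            // try-with-resources flushes and closes the stream
            try (OutputStream os = new BufferedOutputStream(new FileOutputStream(file))) {
                os.write(bytes);
            }
            created++;
        }
        return created;
    }

    // Assumed argument order: <dir> <count> <sizeInBytes> [ext] [text]
    public static void main(String[] args) throws IOException {
        if (args.length < 3) {
            System.out.println("Usage: DummyFileCreatorCli <dir> <count> <sizeInBytes> [ext] [text]");
            return;
        }
        File dir = new File(args[0]);
        int count = Integer.parseInt(args[1]);
        int size = Integer.parseInt(args[2]);
        String ext = args.length > 3 ? args[3] : ".txt";
        boolean text = args.length > 4 && args[4].equalsIgnoreCase("text");
        int n = createFiles(dir, count, size, ext, text, new Random());
        System.out.println("Created " + n + " files in " + dir);
    }
}
```

For example, `java DummyFileCreatorCli c:\temp\dummy 9500 132096` would mirror the settings of the original class (132096 bytes = 129 * 1024), and appending `.txt text` switches to readable content.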